The EU’s landmark AI Act is changing the rules of the road for software vendors that embed AI into products and services. For SaaS vendors, the Act is not a theoretical policy exercise — it forces concrete lifecycle changes: classification of products by risk, ongoing monitoring, documentation requirements, incident reporting, human oversight, and data-governance obligations. This playbook translates the Act and the latest 2025 guidance into a practical compliance roadmap. It answers the central question every product and engineering leader faces: what must my team actually build, document, and run to ship AI-powered features into Europe — without breaking product velocity.

Key takeaways up front:

- The AI Act categorizes AI uses and imposes stricter obligations on “high-risk” systems; obligations include risk management, documentation, logging, human oversight, and traceability.
- The regulatory calendar is phased: the prohibitions on unacceptable-risk practices already apply, while other obligations (especially those for high-risk systems and general-purpose AI) have staged compliance windows. Vendors must track these timelines closely.
- Compliance is cross-functional: product, engineering, legal, privacy, security, and sales must collaborate to produce the artifacts regulators will expect: model cards, data provenance, risk assessments, incident reports, and operational logs.
- Treat compliance as productized engineering work: instrument systems to generate the required documentation automatically, embed guardrails in runtime, and bake monitoring into SLOs and on-call processes.

This article is a practical, step-by-step guide for SaaS vendors in 2025. It covers classification and scoping, technical controls, documentation artifacts, incident handling, GDPR interplay, operational roles, and a 90-day roadmap to move from discovery to baseline compliance.

1 — Understand the scope: classification, risk tiers, and who is regulated

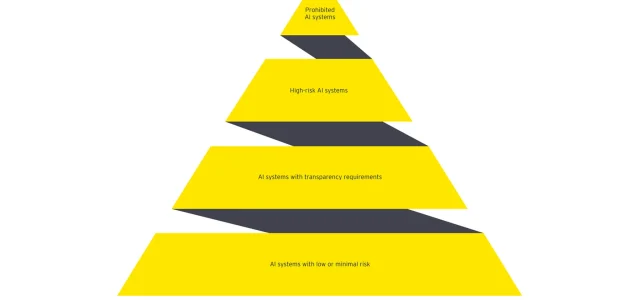

The first compliance task is accurate scoping. The AI Act defines obligations based on how an AI system is used, where it is deployed, and whether it is “high-risk.” High-risk systems include the use cases enumerated in Annex III (for example, those that affect safety or fundamental rights) and systems that serve as safety components of products already regulated by EU law. SaaS vendors must classify each product or feature, not just the underlying model, and document that classification process.

General-purpose AI (GPAI) models and foundation models attract special attention because they power many SaaS features (chat, summarization, drafting). The Commission has issued guidance to clarify definitions and obligations that apply to GPAI providers and downstream deployers; vendors should follow those guidelines to determine whether obligations attach to the model provider, the SaaS vendor, or both. The lines can be subtle: a model provider might face obligations on training and model documentation while the SaaS vendor must show how the model is used, what data it processes, and how it mitigates risks.

Action: run a classification workshop this week. Inventory features that use AI, map each to the Act’s categories (prohibited, limited-risk, high-risk, or minimal risk), and create a short written rationale per feature.
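The output of the workshop can be kept as structured data rather than a slide deck. The following is a minimal sketch, assuming a hypothetical `FeatureClassification` record and made-up feature names; the tiers mirror the four categories above, and the rationale strings are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


@dataclass(frozen=True)
class FeatureClassification:
    feature: str      # the product feature, not the underlying model
    model: str        # hypothetical identifier of the model behind it
    tier: RiskTier
    rationale: str    # short written justification for the tier
    reviewed_by: str


def needs_review(items):
    """Return features whose tier blocks an EU launch until more work is done."""
    blocking = {RiskTier.PROHIBITED, RiskTier.HIGH}
    return [c.feature for c in items if c.tier in blocking]


# Illustrative inventory; names and rationales are assumptions.
inventory = [
    FeatureClassification(
        "ticket-summarizer", "hosted-llm-v1", RiskTier.LIMITED,
        "Summarizes support tickets; agents review the output.", "legal"),
    FeatureClassification(
        "cv-screening", "hosted-llm-v1", RiskTier.HIGH,
        "Ranks job applicants; employment uses are listed in Annex III.", "legal"),
]

print(needs_review(inventory))
```

Keeping the rationale next to the tier means the written justification travels with every later export of the inventory.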

2 — The minimal compliance artifacts every SaaS vendor must produce

Regulators expect evidence. A compliant SaaS vendor should be able to produce the following artifacts on demand; many of these must exist before deployment or at first use in the EU.

Model card / product card. A concise but versioned document describing model identity, intended purpose, limitations, training data summary, performance metrics, and known risks. Model cards form the public face of governance and help buyers and auditors understand intended usage.

Technical documentation. Detailed internal docs covering design, architecture diagrams, data flows, summaries of the training and validation datasets, pre-processing steps, prompt templates, hyperparameters, and testing results. For high-risk systems this documentation needs to be more comprehensive.

Risk assessment & mitigation plan. Lifecycle risk management: identification of foreseeable harms, likelihood/impact scoring, mitigation steps implemented, and acceptance criteria. This should be a living artifact, updated with releases and incidents.

Logging & traceability records. Automated logs that tie user requests to model version, prompt fingerprint, retrieval document IDs (if RAG), tokens in/out, safety filters triggered, and output hashes. Logs must support incident investigation and regulator audits.

Human oversight policy. Documented procedures for human review (when required), escalation paths, and criteria for when outputs must be blocked or escalated into manual workflows.

Incident reporting playbook. A playbook with thresholds for “serious incidents” and templates for reporting to national authorities as required by the Act; it should include roles, timelines, and predefined communications.

Data governance summary. High-level description of personal data used during training, measures for minimization and anonymization, lawful basis for processing, and data retention policies. This artifact is critical wherever GDPR obligations overlap with the AI Act.

Action: build templates for each artifact and integrate them into your CI/CD so each release yields an updated model card, risk summary, and versioned logs automatically.
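One way to wire a model card into CI is to render it from release metadata and fail the build when a required field is missing. This is a sketch under assumptions: the field names, the `build_model_card` helper, and the sample metadata are hypothetical, not a format prescribed by the Act.

```python
import json
from datetime import datetime, timezone

# Fields this sketch treats as mandatory; adjust to your own template.
REQUIRED_FIELDS = (
    "name", "version", "intended_purpose", "limitations", "training_data_summary",
)


def build_model_card(meta, now=None):
    """Render a versioned model card as JSON, or raise if a field is missing."""
    missing = [f for f in REQUIRED_FIELDS if not meta.get(f)]
    if missing:
        raise ValueError("model card is missing fields: " + ", ".join(missing))
    now = now or datetime.now(timezone.utc)
    card = {f: meta[f] for f in REQUIRED_FIELDS}
    card["metrics"] = meta.get("metrics", {})
    card["last_updated"] = now.isoformat()
    return json.dumps(card, indent=2, sort_keys=True)


# Hypothetical release metadata that a CI job would assemble.
release_meta = {
    "name": "ticket-summarizer",
    "version": "1.2.0",
    "intended_purpose": "Summarize support tickets for human agents.",
    "limitations": "May omit details from long threads.",
    "training_data_summary": "Vendor-hosted base model; no customer fine-tuning.",
    "metrics": {"summary_accuracy": 0.91},
}

print(build_model_card(release_meta))
```

Raising on missing fields turns an incomplete card into a failed build instead of a gap discovered during an audit.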

3 — Mapping GDPR onto the AI Act: personal data and lawful basis

SaaS vendors must run two parallel compliance tracks: AI Act obligations and GDPR obligations. These interact heavily where models process personal data (training, fine-tuning, or inference). The European Data Protection Board (EDPB) has published opinions and guidance on AI and GDPR considerations; complying with GDPR remains essential and often shapes the technical mitigations required under the AI Act.

Key GDPR considerations:

- Identify lawful basis for processing personal data used in model development or inference (consent, contract, legitimate interests). Maintain records of processing activities.
- Where models process special categories of data, assess whether processing is permissible and document safeguards.
- Implement rights-by-design: allow data subjects to exercise access, correction, deletion, and portability where applicable, and document how ML artifacts respond to these requests.
- DPIAs (Data Protection Impact Assessments) will often be necessary where model outputs could affect rights; integrate DPIA outputs into the risk assessment required by the AI Act.

Action: run a GDPR+AI triage: which models use personal data? For each, document lawful basis and trigger a DPIA when outputs can materially affect a person’s rights or wellbeing.
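The triage can be expressed as a small rule set so that every model gets the same questions. The sketch below assumes a hypothetical `ModelDataProfile` record; the rules simply restate the considerations above and are no substitute for a legal assessment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelDataProfile:
    model: str
    uses_personal_data: bool
    lawful_basis: Optional[str] = None   # e.g. "consent", "contract"
    special_categories: bool = False     # special categories of personal data
    affects_rights: bool = False         # outputs can materially affect a person


def triage(profile):
    """Return the open GDPR actions for one model (empty list if none)."""
    if not profile.uses_personal_data:
        return []
    actions = []
    if profile.lawful_basis is None:
        actions.append("document lawful basis")
    if profile.special_categories:
        actions.append("document special-category safeguards")
    if profile.affects_rights:
        actions.append("run DPIA")
    return actions


scorer = ModelDataProfile("credit-hint-model", True, affects_rights=True)
print(triage(scorer))
```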

4 — Technical controls: instrument, guard, and monitor

To satisfy auditors and reduce risk, SaaS vendors must implement specific technical controls. Think of these as engineering requirements driven by regulation.

Automatic provenance & logging. Every model call should automatically record: timestamp, anonymized requester id, input fingerprint, model id and version, embedder version, retrieval document ids, tokens in/out, safety flags, and a response hash. Logs must be tamper-resistant and retained per policy. These fields are the minimum dataset regulators will request during an audit.
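A minimal sketch of such a record follows, assuming a hypothetical `build_call_record` helper that a model gateway would call once per request. Field names are illustrative. Raw prompts and responses are stored only as SHA-256 fingerprints here, which is a design assumption rather than a requirement stated in the Act.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(text):
    """Stable SHA-256 fingerprint of a string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_call_record(user_id, prompt, response, *, model_id, model_version,
                      embedder_version, doc_ids, tokens_in, tokens_out,
                      safety_flags, salt):
    """Assemble one audit record for a single model call."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # A salted hash pseudonymizes the ID; it is not full anonymization.
        "requester": fingerprint(salt + user_id),
        "input_fingerprint": fingerprint(prompt),
        "model_id": model_id,
        "model_version": model_version,
        "embedder_version": embedder_version,
        "retrieval_doc_ids": list(doc_ids),
        "tokens_in": tokens_in,
        "tokens_out": tokens_out,
        "safety_flags": list(safety_flags),
        "response_hash": fingerprint(response),
    }


record = build_call_record(
    "user-42", "What is my balance?", "Your balance is 10.",
    model_id="hosted-llm", model_version="1.0", embedder_version="emb-3",
    doc_ids=["doc-17"], tokens_in=5, tokens_out=6, safety_flags=[], salt="s3cr3t",
)
print(json.dumps(record, indent=2))
```

Whether fingerprints alone are enough for incident investigation depends on your retention policy; some teams also keep encrypted raw payloads under stricter access controls.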

Prompt and prompt-template versioning. Treat prompts as code: store them in a registry, tag versions, and require code review before changing templates that affect safety properties.
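As an illustration, a prompt registry can be as small as an append-only list of hashed versions per template name. The `PromptRegistry` class below is a hypothetical in-memory sketch; a production registry would sit behind version control or a database and enforce review through the merge process.

```python
import hashlib


class PromptRegistry:
    """Append-only, in-memory registry of prompt template versions."""

    def __init__(self):
        self._history = {}  # template name -> list of version entries

    def register(self, name, template, reviewer):
        """Store a new version and return its number; unchanged text is a no-op."""
        if not reviewer:
            raise ValueError("a reviewer is required for every prompt change")
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()
        history = self._history.setdefault(name, [])
        if history and history[-1]["sha256"] == digest:
            return history[-1]["version"]
        entry = {
            "version": len(history) + 1,
            "sha256": digest,
            "template": template,
            "reviewer": reviewer,
        }
        history.append(entry)
        return entry["version"]

    def get(self, name, version=None):
        """Return the latest entry, or a specific version if given."""
        history = self._history[name]
        return history[-1] if version is None else history[version - 1]


registry = PromptRegistry()
registry.register("summarize", "Summarize the ticket: {ticket}", "safety-lead")
registry.register("summarize", "Summarize the ticket briefly: {ticket}", "safety-lead")
print(registry.get("summarize")["version"], registry.get("summarize", 1)["sha256"][:12])
```

The stored hash is the same kind of prompt fingerprint that the logging fields refer to, so a log line can be tied back to an exact reviewed template.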

Safety filters & content redaction. Implement pre- and post-generation filters: pre-filter sensitive inputs, and post-scan outputs for disallowed content or privacy leakage. Ensure every step of the filtering chain is logged so that auditors can reconstruct what was filtered and why.
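The sketch below shows the shape of such a chain with a single, deliberately simple filter: a regular expression that redacts email addresses before and after generation. The `guarded_call` wrapper and the stand-in model are assumptions; real deployments would chain several detectors and would not rely on one regex for privacy.

```python
import re

# Simplified email pattern, for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text):
    """Return (redacted_text, number_of_replacements)."""
    return EMAIL.subn("[EMAIL]", text)


def run_filters(text, stage, audit):
    """Apply the filter chain and append one audit entry per filter."""
    cleaned, hits = redact_emails(text)
    audit.append({"stage": stage, "filter": "redact_emails", "hits": hits})
    return cleaned


def guarded_call(user_input, model):
    """Filter the input, call the model, filter the output, return both plus audit."""
    audit = []
    safe_input = run_filters(user_input, "pre", audit)
    raw_output = model(safe_input)
    safe_output = run_filters(raw_output, "post", audit)
    return safe_output, audit


def fake_model(prompt):
    # Stand-in for a real model call; leaks an address on purpose.
    return "Reply to bob@example.org about: " + prompt


output, audit_trail = guarded_call("Contact alice@example.com", fake_model)
print(output)
print(audit_trail)
```

The audit list records which filter fired at which stage and how often, without storing the redacted values themselves.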

RAG controls. If the product uses retrieval-augmented generation, enforce vetting, indexing controls, retention windows, canaries in private corpora (to detect exfiltration), and provenance markers that allow outputs to be traced to source documents.
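Canaries are the easiest of these controls to prototype: plant a unique token in each private corpus and scan every output for it. The helper names and token format below are assumptions made for this sketch.

```python
import secrets


def make_canary(corpus_id):
    """Create a unique token to plant inside one private corpus."""
    return f"canary-{corpus_id}-{secrets.token_hex(8)}"


def leaked_canaries(output, canaries):
    """Return the corpus IDs whose canary token appears in a model output.

    `canaries` maps token -> corpus ID.
    """
    return sorted({corpus for token, corpus in canaries.items() if token in output})


# Fixed tokens here so the example is reproducible; use make_canary() in practice.
planted = {
    "canary-hr-1111aaaa": "hr-policies",
    "canary-fin-2222bbbb": "finance-reports",
}

answer = "According to the report (canary-fin-2222bbbb), revenue grew."
print(leaked_canaries(answer, planted))
```

A hit means content from a private corpus reached an output, which should be routed into the incident workflow rather than silently dropped.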

Semantic monitoring. Beyond uptime and latency, monitor hallucination metrics, toxicity scores, instruction compliance, drift in model quality, and statistical anomalies in outputs. Flagged outputs must trigger human review processes.
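One simple way to turn these signals into an alert is a rolling window over per-output flags (for example, "toxicity score above a limit"). The `FlagRateMonitor` class, window size, and threshold below are hypothetical choices for a sketch, not recommended values.

```python
from collections import deque


class FlagRateMonitor:
    """Alert when the share of flagged outputs in a rolling window is too high."""

    def __init__(self, window=100, threshold=0.05):
        self.events = deque(maxlen=window)  # oldest events fall off automatically
        self.threshold = threshold

    def rate(self):
        """Fraction of flagged outputs in the current window."""
        return sum(self.events) / len(self.events) if self.events else 0.0

    def record(self, flagged):
        """Record one output; return True if human review should be triggered."""
        self.events.append(bool(flagged))
        window_full = len(self.events) == self.events.maxlen
        return window_full and self.rate() > self.threshold


monitor = FlagRateMonitor(window=10, threshold=0.2)
alerts = [monitor.record(flag) for flag in [False] * 7 + [True] * 3]
print(alerts[-1], monitor.rate())
```

Waiting for a full window avoids alerts from the first handful of requests after a deploy; the trade-off is slower detection on low-traffic features.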

Explainability & user notices. For transparency obligations, provide meaningful explanations where required: indicate when outputs are AI-generated, provide a high-level summary of the data sources used, and offer user-facing controls where appropriate.

Action: prioritize instrumentation that can be implemented with little UX change: robust logs, prompt registries, and a post-generation output scanner pipeline.

5 — Incident management and reporting

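The AI Act obliges providers of high-risk systems to report “serious incidents” (for example, serious harm to a person's health or an infringement of fundamental-rights obligations) to national authorities within defined timeframes. Article 73 sets the general deadline at no later than 15 days after the provider becomes aware of the incident, with shorter windows for the most severe cases. Prepare now: waiting for a real incident is too late.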

Define what’s a serious incident. Examples include data exfiltration via model outputs, errors causing financial harm, systemic bias resulting in discriminatory decisions, or security breaches exposing model weights or training data. Map incident types to severity levels and regulatory reporting requirements.

Incident response playbook. Create runbooks covering detection, containment, eradication, recovery, evidence preservation (immutable logs), user notification, and regulator reporting. Pre-draft reporting templates that include timeline, mitigations, affected cohorts, and root-cause hypotheses.

Testing & tabletop exercises. Run quarterly drills that simulate a model hallucination that leaks PII, or a jailbreak that propagates misinformation. Use drills to exercise cross-functional communication (product/legal/PR/security) and ensure the reporting cadence can be met.

Action: implement an “incident to regulator” workflow in your ticketing/IR system that auto-populates required fields from production logs and escalates to legal and senior ops.
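The auto-population step could look like the sketch below, which drafts a report from production log records. It assumes records shaped like the audit log described earlier (`timestamp`, `requester`, `model_version`) and a hypothetical `draft_incident_report` helper; the output fields follow the playbook's template, not an official reporting form.

```python
def draft_incident_report(incident_type, records):
    """Pre-fill a regulator report draft from the log records tied to an incident."""
    if not records:
        raise ValueError("no log records supplied")
    # ISO 8601 timestamps in the same UTC format sort correctly as strings.
    ordered = sorted(records, key=lambda r: r["timestamp"])
    return {
        "incident_type": incident_type,
        "first_seen": ordered[0]["timestamp"],
        "last_seen": ordered[-1]["timestamp"],
        "affected_requesters": len({r["requester"] for r in ordered}),
        "model_versions": sorted({r["model_version"] for r in ordered}),
        "root_cause_hypothesis": None,   # filled in by the incident lead
        "mitigations": [],               # filled in during response
        "escalate_to": ["legal", "senior-ops"],
    }


logs = [
    {"timestamp": "2025-05-01T10:05:00+00:00", "requester": "a1", "model_version": "1.1"},
    {"timestamp": "2025-05-01T10:00:00+00:00", "requester": "b2", "model_version": "1.0"},
    {"timestamp": "2025-05-01T10:07:00+00:00", "requester": "a1", "model_version": "1.1"},
]

draft = draft_incident_report("pii-leak-in-output", logs)
print(draft)
```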

6 — Organization & roles: build an LLMOps + Compliance team

Compliance is not a single person’s job. The right structure reduces the operational friction of meeting regulatory obligations.

Suggested core roles

- Compliance lead (owner). Owns the AI Act artifacts, coordinates audits, and interfaces with regulators.
- Product owner. Maps features to risk tiers and prioritizes feature gating against compliance work.
- LLMOps / Platform engineer. Implements prompt versioning, logging, RAG vetting, and model deployment controls.
- Safety / Risk engineer. Runs adversarial tests, monitors semantic metrics, and designs human oversight.
- Privacy lead. Coordinates DPIAs, data subject requests, and GDPR interfaces.
- Legal counsel. Interprets obligations, prepares reporting templates, and coordinates with national authorities.

Embed compliance checks into the delivery pipeline: merge requests that change prompt templates or retrieval indexes should require a compliance checklist and signoff from the safety or compliance lead.
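A merge gate of this kind can be a few lines in a CI step. In the sketch below, the path patterns (`prompts/*`, `retrieval/index/*`) and the approver role names are assumptions about a hypothetical repository layout.

```python
from fnmatch import fnmatch

# Paths whose changes affect safety properties (hypothetical layout).
SENSITIVE_PATTERNS = ("prompts/*", "retrieval/index/*")
# Roles allowed to sign off on those changes (hypothetical names).
SIGNOFF_ROLES = {"safety-lead", "compliance-lead"}


def requires_signoff(changed_files):
    """Return the changed files that fall under a sensitive path pattern."""
    return [
        path for path in changed_files
        if any(fnmatch(path, pattern) for pattern in SENSITIVE_PATTERNS)
    ]


def gate(changed_files, approvers):
    """Return (allowed, flagged_files) for a merge request."""
    flagged = requires_signoff(changed_files)
    allowed = not flagged or bool(SIGNOFF_ROLES & set(approvers))
    return allowed, flagged


print(gate(["prompts/support/summary.txt", "README.md"], approvers=["dev-1"]))
```

Returning the flagged files alongside the decision lets the CI job tell the author exactly which change triggered the checklist.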

Action: create a RACI matrix for the artifacts listed earlier; set SLAs for artifact generation (e.g., model card update within 24 hours of release).

7 — Product design patterns to reduce regulatory burden

Engineering choices can simplify compliance. Design for lower regulatory exposure when possible.

Minimize personal data usage. Use synthetic or de-identified datasets for fine-tuning; avoid using personal data unless strictly required.

Offer human-reviewed modes. For high-risk outputs, provide a “publish only after human review” toggle rather than immediate public output.

Scoped feature gating. Region-based gating: block certain features in the EU or route EU traffic to a stricter workflow until compliance artifacts are complete.

Transparent defaults. Opt-in defaults for high-impact features (e.g., generation of legal or medical drafts), and clear labels when content is AI-generated.

Action: evaluate whether feature toggles and region gating can reduce initial compliance scope and allow faster rollouts in lower-risk markets.
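Region gating and human-reviewed modes combine naturally into one decision function. The policy encoded below is an assumption made for illustration (for instance, routing EU traffic for unfinished limited-risk features to human review); the right policy is a legal and product decision, and non-EU rules are out of scope for this sketch.

```python
def resolve_mode(feature_tier, region, artifacts_complete):
    """Decide how a feature is served: 'enabled', 'human_review', or 'blocked'."""
    if region != "EU":
        return "enabled"          # other jurisdictions are handled elsewhere
    if feature_tier == "prohibited":
        return "blocked"
    if feature_tier == "high-risk":
        # High-risk features stay off in the EU until artifacts exist,
        # and keep a human in the loop afterwards.
        return "human_review" if artifacts_complete else "blocked"
    # Limited- and minimal-risk features use the stricter workflow
    # until their compliance artifacts are complete.
    return "enabled" if artifacts_complete else "human_review"


for tier in ("high-risk", "limited-risk"):
    print(tier, resolve_mode(tier, "EU", artifacts_complete=False))
```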

8 — Documentation & audit readiness: how to store and show compliance evidence

Regulatory inspectors will ask for traceable artifacts. Consider these operational choices now.

Immutable storage for logs and artifacts. Use write-once storage for critical logs with controlled retention policies and access controls to preserve chain of custody.
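Write-once storage can be complemented with a hash chain, so that any later edit to a stored entry is detectable. The following is a minimal in-memory sketch; `append_entry` and `verify` are hypothetical helpers, and the chain makes tampering evident rather than impossible.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, payload):
    """Hash the previous hash together with a canonical JSON payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(chain, payload):
    """Append a log entry that commits to everything written before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev_hash, "payload": payload,
                  "hash": _digest(prev_hash, payload)})


def verify(chain):
    """Return True if no entry was modified, removed, or reordered."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


log_chain = []
append_entry(log_chain, {"event": "model_call", "id": 1})
append_entry(log_chain, {"event": "model_call", "id": 2})
print(verify(log_chain))
```

Periodically anchoring the latest hash somewhere outside the logging system (for example, in a signed release note) strengthens the chain-of-custody argument.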

Versioned model & prompt registry. Keep model binaries, training hashes, prompts, and deployment manifests in a registry with immutable tags.

Automated artifact generation. Integrate document generation into CI: builds generate model cards, risk summaries, and test results automatically and publish them to an internal compliance portal.

Audit portal for regulators. Maintain a secure portal with read-only access that regulators can be given in investigations; include search and export capabilities for key logs.

Action: plan a 1-click export: regulator_name + time_range → ZIP of required artifacts (redacted as needed).
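The export itself needs little more than the standard library. This sketch assumes artifacts are available as in-memory records with a name, a creation time, and already-redacted bytes; `export_bundle` and the manifest format are hypothetical.

```python
import io
import zipfile
from datetime import datetime


def export_bundle(regulator_name, start, end, artifacts):
    """Return ZIP bytes with every artifact created inside [start, end]."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        included = []
        for artifact in artifacts:
            if start <= artifact["created"] <= end:
                archive.writestr(artifact["name"], artifact["content"])
                included.append(artifact["name"])
        manifest = (
            f"regulator: {regulator_name}\n"
            f"from: {start.isoformat()}\n"
            f"to: {end.isoformat()}\n"
            f"files: {len(included)}\n"
        )
        archive.writestr("MANIFEST.txt", manifest)
    return buffer.getvalue()


# Illustrative artifacts; content is assumed to be redacted upstream.
stored = [
    {"name": "model_card.json", "created": datetime(2025, 3, 1), "content": b"{}"},
    {"name": "old_calls.log", "created": datetime(2024, 1, 1), "content": b"..."},
]

bundle = export_bundle("example-authority", datetime(2025, 1, 1),
                       datetime(2025, 12, 31), stored)
print(zipfile.ZipFile(io.BytesIO(bundle)).namelist())
```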

9 — Pricing, contracts, and commercial implications

The AI Act influences commercial terms and sales processes.

Contract clauses. Update contracts and Terms of Service to include: responsibility boundaries (provider vs deployer), data handling terms, audit and compliance cooperation clauses, and limitations of liability for model outputs.

Customer disclosures. Some customers will request evidence of compliance; prepare templated summaries (model card, compliance status, DPIA outcomes) that can be shared under NDA.

Insurance & SLAs. Revisit cyber and professional liability policies; insurers may require documented safety testing and incident playbooks. Define SLAs for availability and latency separately from guarantees on model correctness.

Pricing impact. Complying with the AI Act has costs: engineering, audits, documentation, support. For SaaS vendors, price and packaging should reflect increased operating costs for high-assurance features.

Action: update sales enablement with a compliance FAQ and standard NDAs for due diligence requests.

10 — Global coordination: other jurisdictions and cross-border issues

EU rules influence global practice. Vendors serving global customers must navigate multiple, sometimes overlapping laws (EU AI Act, GDPR, state-level AI bills). Key considerations:

Data residency & transfers. If training or logs contain personal data, ensure transfers comply with EDPB guidance and use appropriate safeguards for third-country transfers.

Divergent rules. Some markets (US, China) have different enforcement philosophies; adopt the EU requirements as a “gold standard” for regulated workflows and allow regional relaxations where lawful.

GPAI obligations. If your product uses or distributes general-purpose models, monitor EU guidance and timelines for GPAI providers; responsibilities may be split across model provider and deployer.

Action: maintain a compliance matrix by jurisdiction: feature X allowed? yes/no; required artifacts; transfer constraints; and local contact person.

11 — A practical 90-day roadmap to baseline compliance

If you have AI features in production or near-production, use this 90-day sprint to reach baseline readiness.

Days 1–14: Discover & classify. Run feature inventory, classify risk per AI Act, and identify models that process personal data. Start a legal review and begin DPIA scoping where necessary.

Days 15–30: Instrumentation & logging. Implement mandatory logging fields, deploy a prompt registry, and version model binaries. Start storing artifacts in immutable storage.

Days 31–45: Documentation & risk assessments. Produce model cards, technical documentation templates, and the first round of risk assessments. Map human oversight flows.

Days 46–60: Safety & monitoring. Deploy semantic monitoring for hallucination, toxicity, and drift. Add alerting and human-review queues. Run initial adversarial tests.

Days 61–75: Incident playbooks & reporting templates. Finalize incident playbooks, pre-draft regulator reporting templates, and run tabletop exercises with cross-functional teams.

Days 76–90: Contracts, sales enablement & pilot audits. Update contracts, prepare customer disclosure packages, and run a mock audit to validate artifacts and retrieval procedures.

Action: treat each phase as a deliverable. Generate an artifact at the end of each phase and present it to leadership.

12 — Practical checklists and sample language

Minimal model card (one paragraph to include in UI and customer DPA):

Model name and version, intended purpose, limitations and failure modes, training data high-level summary, accuracy metrics on key tasks, contact for compliance questions, and last update timestamp.

Risk assessment template (short):

Feature description; risk category; affected user population; likelihood and impact rating; mitigations implemented; monitoring signals and thresholds; residual risk and acceptance.
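The template maps onto a small record with a likelihood × impact score. The 1 to 5 scales and the acceptance threshold below are assumptions for this sketch; the Act does not prescribe a scoring scheme.

```python
from dataclasses import dataclass, field


@dataclass
class RiskEntry:
    feature: str
    harm: str
    likelihood: int                 # 1 (rare) to 5 (frequent), after mitigations
    impact: int                     # 1 (negligible) to 5 (severe), after mitigations
    mitigations: list = field(default_factory=list)
    acceptance_threshold: int = 8   # hypothetical cut-off for residual risk

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self):
        """Residual risk score: likelihood multiplied by impact."""
        return self.likelihood * self.impact

    @property
    def accepted(self):
        """True if the residual risk is within the acceptance threshold."""
        return self.score <= self.acceptance_threshold


entry = RiskEntry(
    feature="ticket-summarizer",
    harm="summary omits a safety-relevant detail",
    likelihood=2,
    impact=3,
    mitigations=["agent reviews summary before sending"],
)
print(entry.score, entry.accepted)
```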

Incident report skeleton (for regulators):

Timeline of events; affected cohort size; root cause hypothesis; mitigations performed; recurrence controls; contact person and next steps.

13 — Common pitfalls and how to avoid them

Treating the Act as a legal checkbox. Compliance is operational and engineering work. Avoid doc-only approaches that lack instrumentation.

Under-estimating prompt changes. Every prompt tweak can change the model’s risk profile. Use versioned prompts and require review.

Ignoring retrieval. RAG pipelines are often the weakest link — retrieval errors leak context, and indexing mistakes expose private data.

Not practicing incidents. The difference between a fast, transparent response and a slow, defensive one determines regulatory outcomes and reputational damage.

14 — Tools and vendor landscape for compliance

Vendors and open-source tools are emerging to automate many artifacts: prompt registries, model cards, semantic monitors, and incident reporting templates. Choose tools that can export the artifacts you need and integrate with your CI/CD. Look for vendors that support automated generation of model cards and provide connectors to your logging and ticketing systems.

Action: evaluate 3 tools in a 4-week pilot: one for prompt & model registries, one for semantic monitoring, and one for audit evidence management.

15 — Final recommendations (PRAGMATIC RULES)

- Classify first: accurate scoping avoids wasted effort.
- Instrument everything automatically: logs, model ids, prompt fingerprints.
- Automate artifact generation: CI should produce model cards and risk summaries.
- Embed human oversight where risk is non-negligible.
- Run drills and be ready to report: practice incident response now.
- Align contracts and customer communications with compliance realities.
- Keep GDPR and data transfer concerns front and center.

Verdict

The EU AI Act is reshaping how SaaS vendors conceive, build, and operate AI features. In 2025 the law is moving from negotiation to enforcement and guidance is actively evolving. The smart vendor treats compliance as a product problem: instrumented systems that produce traceable artifacts, modular guardrails that ship with product features, and clear organizational ownership of legal and operational responsibilities. Vendors that build this capability will not simply avoid fines; they will gain market trust, reduce safety incidents, and stand out to customers who require evidence of robust governance.