TL;DR: Prompt injection and similar attacks are real risks; defend with input validation, prompt separation, and runtime filters. Adopt the controls in the OWASP LLM Prompt Injection cheat sheet and instrument model calls to detect anomalous behavior. Design a kill switch, require human approval for risky actions, and keep rigorous logs for audits and incident response. Large language models and the agentic systems […]

Category: Security & Privacy

California’s New AI Safety Law (SB-53): What Publishers & Startups Need

Legal disclaimer: This article summarises California Senate Bill 53 (SB-53) for informational purposes only and does not constitute legal advice. Consult a licensed attorney for compliance guidance. TL;DR: SB-53 (Transparency in Frontier AI Act) requires large frontier model developers to publish safety frameworks and testing information. SaaS vendors should review procurement and provider disclosures and update […]

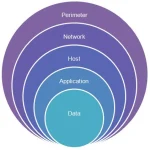

Securing LLMs: Prompt Filtering, Rate Limits & Access Controls

Large language models are already part of many products. They write help text, summarize documents, and automate workflows. That power comes with risk. A single crafted prompt can change a model’s behavior. A misconfigured connector can leak data. A runaway script can wipe out your budget. This guide explains what to fix first, how to […]