TL;DR

- Prompt injection and similar attacks are real risks; defend with input validation, prompt separation, and runtime filters.

- Adopt OWASP LLM Prompt Injection cheatsheet controls and instrument model calls for anomalous behavior.

- Design a kill-switch, human approvals for risky actions, and rigorous logging for audits and incident response.

Large language models and the agentic systems built around them have become a new focal point for attackers. In 2025 a stream of high-profile research and real-world disclosures exposed how prompt-injection, poisoned content, and connector-based attacks can be used to steal secrets, coax models into unsafe actions, or run “zero-click” exfiltration without user interaction. At the same time vendors and security teams are shipping mitigation features and product controls — from connector hardening and prompt registries to policy engines and semantic output filters — to blunt the threat. This article explains the attack patterns making headlines, why they work, and what defensive product releases and engineering controls are now required to operate LLMs safely in production.

How attackers weaponize prompts and connectors

Prompt injection remains the fundamental building block: adversaries hide instructions inside user inputs, documents, images, or retrieved content so the model treats malicious text as authoritative system guidance. That simple concept now has evolved into more sophisticated forms — “indirect” prompt injection (poisoning the knowledge store or a shared document so the model later retrieves tainted content), multimodal injections (instructions embedded in images or attachments), and chain attacks that combine a prompt-injection with an agent’s ability to call tools or follow links. These threats are not theoretical; recent academic studies and industry analyses show attackers crafting prompts that reliably override safety constraints and trigger undesired behavior.

The danger grows once models are connected to enterprise services. Two prominent, independently reported incidents illustrate the risk: a zero-click vulnerability in a widely deployed assistant (dubbed “EchoLeak”) showed how carefully crafted content could cause a Copilot instance to leak internal data without user clicks, and research presented at Black Hat (AgentFlayer) demonstrated how a single poisoned document in a cloud drive could extract sensitive files via ChatGPT Connectors. These incidents underline a hard truth: connectors and document indexing drastically expand the attack surface and turn seemingly benign files into covert exfiltration vectors.

ArXiv paper on prompt injection attacks (research primer)

Why standard web security controls are insufficient

Traditional defenses — input sanitization, URL whitelisting, or antivirus scanning — are necessary but not sufficient for LLM ecosystems. Models interpret natural language and implicitly follow embedded instructions; they have no native concept of “trusted vs untrusted prompt content” unless engineering layers enforce a separation. Moreover, RAG (retrieval-augmented generation) systems can reintroduce poisoned content long after it was indexed, creating a persistent attack surface that evolves with the data corpus. Academic work and practitioner writeups now emphasize that LLM security requires semantic monitoring, provenance, and runtime guardrails rather than purely syntactic filtering.

What vendors are shipping now

Vendors moved quickly after the flurry of disclosures. Platform-level responses fall into several product categories:

• Connector and ingestion hardening. Cloud providers and model hosts added stricter fetching policies, document rendering sanitization, and quarantines for untrusted content. Some vendors now refuse to auto-render remote content in connectors or render it only through a sanitized pipeline.

• Policy engines & scope controls. Admin consoles now offer per-connector scopes (read-only, metadata-only, deny-write), whitelists for trusted domains, and policy controls that block execution of high-risk tool calls. These controls let operators balance productivity vs risk at the organization level.

• Prompt registries & versioning. Tools that treat prompts like code — with versioning, review gates, and immutable prompt templates — are shipping as first-class product features so teams can audit who changed a safety-critical prompt and when. OWASP guidance and vendor docs recommend this approach as a baseline defense.

• Semantic output filters & detection. Observability vendors and model hosts now offer detectors for hallucination, PII leakage, and instruction compliance. Outputs can be scored and routed to human reviewers when thresholds are crossed, turning automatic responses into conditional workflows.

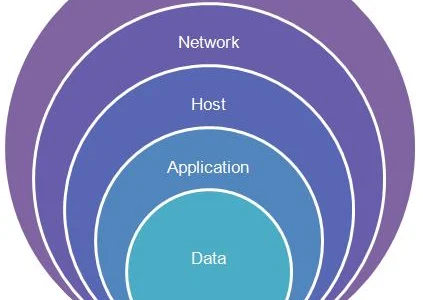

Practical defensive architecture (defense-in-depth)

A resilient LLM security posture combines product controls with engineering practice. Start by treating any external content as untrusted: isolate connectors, enforce strict scopes, and render external files in sanitized sandboxes. Instrument every model call with provenance metadata (prompt fingerprint, retrieval document ids, model version) and push those logs into an immutable audit store for fast triage. Deploy semantic monitoring for anomalies (sudden spikes in token egress, out-of-pattern reference URLs, or unusual high-confidence factual assertions) and route high-risk outputs to human-in-the-loop review. Finally, incorporate honey tokens inside private corpora to detect exfiltration attempts and run continuous red-teaming using adversarial prompt suites. OWASP’s prompt-injection cheat sheets and recent vendor advisories provide concrete patterns to operationalize these controls.

What product and security teams must do this quarter

Short actionable priorities: inventory all connectors and document stores accessible to agents; enforce least privilege and region-aware access controls; integrate prompt registries into CI so prompt changes require review; add semantic DLP (data loss prevention) checks for outputs; run a zero-click tabletop and a simulated exfiltration exercise; and upgrade SLA and contractual language to reflect new risks for enterprise deployments.

Closing note

LLM-targeted attacks are evolving quickly and so are defensive product features. The recent Wave of EchoLeak- and AgentFlayer-style disclosures made one thing clear: stitching models to live data and tools creates opportunity for powerful automation — and for stealthy attacks. The safe path forward is pragmatic: assume connected models will face adversarial inputs, invest in semantic monitoring and policy controls, and make human oversight an operational requirement for any high-risk agentic workflow. Vendors have shipped important mitigations, but the real work is at the integration layer — where product teams must bake in separation, traceability, and continuous red-teaming to keep LLMs productive and secure.

FAQs

- Q: Can prompt injection be fully prevented?

- A: No single control is foolproof. Use layered defenses: input filtering, prompt separation, runtime controls, human approvals and monitoring to reduce risk. :contentReference[oaicite:12]{index=12}

- Q: Should we stop using LLMs for sensitive tasks?

- A: Not necessarily — use stronger controls, restrict actions, and require human review for high-risk workflows. Consider on-device or isolated inference for very sensitive data. :contentReference[oaicite:13]{index=13}

- Q: Which resources should teams read to get started?

- A: Start with OWASP’s LLM Prompt Injection Cheat Sheet, IBM’s prompt injection guide, and key academic papers on prompt attacks and defenses. :contentReference[oaicite:14]{index=14}